Autonomous robots can inspect nuclear power plants, clean up oil spills in the ocean, accompany fighter planes into combat and explore the surface of Mars.

Yet for all their talents, robots still can’t make a cup of tea.

That’s because tasks such as turning the stove on, fetching the kettle and finding the milk and sugar require perceptual abilities that, for most machines, are still a fantasy.

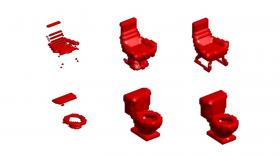

Among them is the ability to make sense of 3-D objects. While it’s relatively straightforward for robots to “see” objects with cameras and other sensors, interpreting what they see, from a single glimpse, is more difficult.

Duke University graduate student Ben Burchfiel says the most sophisticated robots in the world can’t yet do what most children do automatically, but he and his colleagues may be closer to a solution.

Read more at Duke University

Image: When fed 3-D models of household items in bird's-eye view (left), a new algorithm is able to guess what the objects are, and what their overall 3-D shapes should be. This image shows the guess in the center, and the actual 3-D model on the right.

Image Credit: Courtesy of Ben Burchfiel