The chips in most modern desktop computers have four “cores,” or processing units, which can run different computational tasks in parallel. But the chips of the future could have dozens or even hundreds of cores, and taking advantage of all that parallelism is a stiff challenge.

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory have developed a new system that not only makes parallel programs run much more efficiently but also makes them easier to code.

In tests on a set of benchmark algorithms that are standard in the field, the researchers’ new system frequently enabled more than 10-fold speedups over existing systems that adopt the same parallelism strategy, with a maximum of 88-fold.

For instance, algorithms for solving an important problem called max flow have proven very difficult to parallelize. After decades of research, the best parallel implementation of one common max-flow algorithm achieves only an eightfold speedup when it’s run on 256 parallel processors. With the researchers’ new system, the speedup is 322-fold, and the program requires only one-third as much code.

Continue reading at Massachusetts Institute of Technology (MIT)

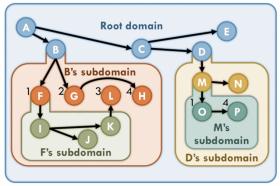

Image: A new system dubbed Fractal achieves speedups of up to 88-fold through a parallelism strategy known as speculative execution. Courtesy of the researchers (edited by MIT News)